# Image Classification

# CNN

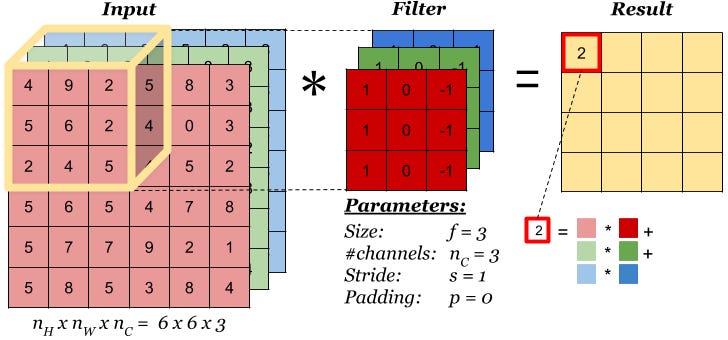

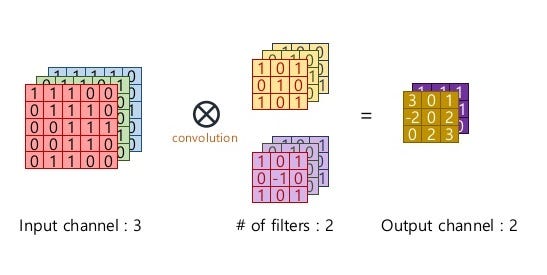

Parameter calculation

input dimension: (I_w, I_h, n_channel). When n_channel = 1, it is a 2D inputkernel dimension: (K, K, n_channel). Kernel is not always a square, it can be (K_w, K_h, n_channel)output size:output dimension: (O, O, output_channels)

Ways of improving accuracy

- wider: increase number of output channels

- deeper: increase number of neural networks

- resolution larger: increase input size

# ResNet

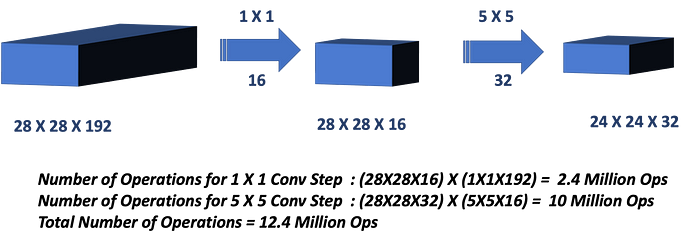

conv1x1

- Dimensionality reduction/augmentation

- Reduce computational load by reducing parameter map

- Add non-linearity (RELU)

Batch normalization

I: inputO: outputb,c,x,y: batch, channel, width, heightu, sigma:- if training, computed from batch

- if testing,

- using average of whole training set

- a moving average calculated during training by:

running = 0.1*running + 0.9 * sample

Origin

deeper is better:

- but gradient vanishing or exploding when layer is deeper[the input x is one of the term of gradient chain]

- but poor activations like sigmoid

- gradient vanishing: at some time when intermediate input too large or too small

- gradient vanishing: derivative is at most 0.25, the front layer's gradient is chain product of later ones, some 0.1s' product goes to 0 very quickly [to alleviate, use modified version:

1 / (1 + exp(-ax))with largera]

- but poor activations like tanh [gradient vanishing at some time when intermediate input too large or too small]:

- but poor activations like relu

- gradient vanishing: negative [to alleviate, use LeakyReLU, but lose some non-linearity]

- gradient exploding: positive, return 1 and by multiplying other terms in gradient chain, boom!

weight initialization matters:

- random normalization or uniform, weight cannot be too small or too large, thus each output's variance should keep the same

- weight too large(then input too large) => relu is okay, but others produce vanishing gradient

- weight too small(then input too small) => all produce vanishing gradient [1. intrinsic reasons; 2. small x in a term in gradient chain]

- xavier + tanh, he + relu

- variance is not strictly the same, but better

- complexity of finding optima increases => convergence is slower, and learning rate should be small

- random normalization or uniform, weight cannot be too small or too large, thus each output's variance should keep the same

larger learning rate is preferred:

- faster convergence

- less likely to get stuck in sub minima

- error term's mean is 0(gradient is linear form of x), and variance is bounded by learning rate. Larger lr adds more noise.

batch normalization

- better variance control than xavier and he

- normalizes the exploding weights

- remove unimportant info in batch, height, width dimension as it normalizes in different channels

- less info results in faster convergence and simplicity in fitting

- enables larger learning rate for better performance[convergence and optima]

references

Residual block + skip connection

- if input dimension the same as residual block's output dimension(left), the skip connection:

X_skip = X, is a identity block - otherwise(right), the skip connect block is a

conv1x1 + bn block - skip connection mitigates vanishing gradient, as the input is forwarded

- there are many variants for residual blocks

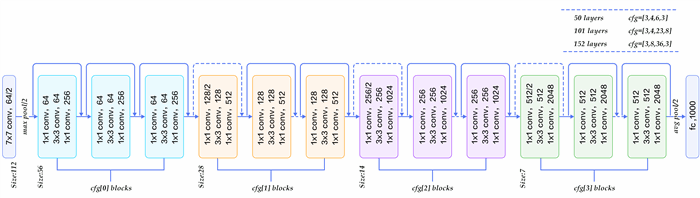

5 stages

- 1st stage: 3 paddings for up, bottom, right and left

- 1st stage's max pooling: 1 paddings

- other padding options:

VALID: no paddingSAME: evenly left and right, if the padding number is odd, the extra is put at the right to make input and output size the same (ignoring stride)

- blue(2nd), yellow(3rd), pink(4th), green(5th)

- 2nd to 5th stages uses

SAMEpadding - every blue, yellow, purple and green block below is a

residual block + skip connection - from 2nd to 5th stages, every

residual block + skip connectionis repeated in some defined times (cfg) - full line: skip connection uses identity block

- dotted line: skip connection uses conv1x1+bn block

- average pooling: 1x1

- 112 => 56 => 28 => 14 => 7 => 2048 => 1000

# Class activation map

Global activation pooling:

- regularization: reduce dimension, drop information and avoid overfitting

- localization

Produce class activation map:

- adds a gap between last conv and fc

- compute weighted linear sum

wis from fc layerfkis from conv layer's k kernel

# EfficientNet

# 模型结构

图例:

EfficientNet-B0结构图:

EfficientNet-B0是用强化学习的方式通过神经网络架构搜索设计得到的。学习时的优化目标为:

T: 目标计算量w: -0.07,超参系数,用来衡量精度和计算量的权重。m: 模型网络

MBConv: 移动翻转瓶颈卷积 (Mobile inverted Bottleneck Convolution), 作为EfficientNet的核心模块,该模块是对其他卷积网络模型提出的各种模块的组装,其内部可拆分成4个子结构:- 深度可分离卷积 (depthwise separable convolution), 该结构借鉴于Xception。该结构将原始的卷积操作分成了两步:深度卷积 (depthwise convolution) 和 逐点卷积 (pointwise convolution)。深度卷积时,核尺寸正常,但是一个卷积核 (kernel)只负责一个通道。逐点卷积的核尺寸是1x1,一个卷积核负责所有通道。与深度卷积相反,逐点卷积实现了跨通道信息的利用,两者结合使用之后实现了类似原始卷积操作的效果,并且大大减少了参数数量。

- 翻转残差 (inverted residual block),该子结构借鉴于MobileNetV2,通常与深度可分离卷积结合使用。残差模块会将输入和卷积结果进行跳越连接。原始的残差模块,例如ResNet中的模块,是两头通道维度大,中间通道维度小。 原始残差模块通过减少中间通道的维度来减少参数数量。翻转之后的模块首先对通道进行了扩展 (expansion) 操作, 即对输入进行1x1的逐点卷积并根据扩展比例(expand ratio)改变输出通道维度(如扩展比例为3时,会将通道维度提升3倍。但如果扩展比例为1,则直接省略该1x1的逐点卷积和其之后批归一化和激活函数),因为后续进行深度卷积而不是原始卷积,所以参数并不多,因此通道维度并不需要减少,最后形成了两头通道维度小,中间通道维度大的结构。甚至由于深度卷积减少的参数过多,最后一次卷积时为了减少信息进一步损失, 翻转残差并没有启动激活函数。

- 压缩激发 (squeeze and excitation block),借鉴于SENet。该结构类似于注意力机制,通过特征图 (feature map) 为自身学习一个特征权值,通过单位乘的方式得到一组加权后的新的特征权值,以此来实现对每个通道赋予不同权重。

- 随机深度 (stochastic depth), 借鉴于优化版的ResNet。深层网络学到的信息并不一定全部有效,因此很容易过拟合。于是一种dropout的思想在2012年由Hinton提出。Dropout时,会摒弃一些神经元的连接,减少神经元之间的相互作用。因为dropout是随机的,所以训练时还获得了很多版本的简化模型,最后预测时实现了多个简化模型的融合,提升了网络的泛化能力。随机深度与dropout类似,该结构会按一定概率跳过一整个残差模块,使网络获得随机深度,以此来缩短模型训练时间并且增强网络的泛化能力。

Swish: 是谷歌大脑团队基于强化学习搜索到的非单调的激活函数,被证明在某些情况下比ReLU更优。

# 复合缩放

为了获得更好的精度,放大卷积神经网络是一种广泛的方法。然而,放大卷积神经网络的过程从来没有很好的理解过,目前通用的几种方法是放大卷积神经网络的深度、宽度和分辨率:

- 宽度: 增加卷积网络的通道维度,更宽的网络能够捕获更细粒度的特征

- 深度:增加卷积网络的深度,即卷积层的数量。更深的网络可以捕获更丰富和更复杂的特征,但是由于梯度弥散、梯度爆炸等问题,训练难度也会增大。

- 分辨率:增加输入图片的分辨率,更清晰的图片往往会有更细粒度的特征

在之前都是单独放大这三个维度中的一个,但是单独放大某个维度很容易出现准确率饱和的情况,如下图所示:

尽管任意放大两个或者三个维度也是可能的,但是任意缩放需要繁琐的人工调参同时可能产生的是一个次优的精度和效率。因此EfficientNet系列提出了复合缩放 (compound scaling) 的算法, 先确定基础网络,然后通过 AutoML 的方式网格搜索三个维度的缩放比例,使用者可以根据自己的需求和硬件资源,按照已经搜索到的缩放比例,使用一个复合系数Φ成倍的缩放基础网络来获得适合自己的网络。

因为卷积神经网络可以视为几个stage的组合,每个stage重复不同的次数,而每个相同的stage的重复block都有相同的结构,所以卷积神经网络的函数可以定义如下:

i: stage 索引F: 残差模块卷积操作X: 输入L: 残差模块重复次数H, W, C: 高度,宽度,通道数

通过AutoML搜索超参: d, w, r, 在给定硬件资源的条件下,最大化精度:

其中d是深度缩放系数,w是宽度缩放系数,r是分辨率缩放系数。加倍深度,FLOPS也加倍,加倍宽度或分辨率,FLOPS变四倍,这里限制放大倍数的乘积约等于2,这样后续放大的时候,给定任意Φ, FLOPS总会被约束在 2^Φ。

基础网络是EfficientNet-B0时:

d: 1,2w: 1.1r: 1.15

# EfficientNet preparation

- create conda environment

conda create -n fc pip=19.1.1 cudatoolkit=10.1 cudnn=7.6.5 cupti=10.1 - install tensorflow 2.2 (tf2.2 only supports cuda 10.1)

pip install tensorflow==2.2.0 (gpu enabled) # or conda install -c conda-forge tensorflow=2.2.0 (search for tensorflow-gpu first)TIP

To verify if tensorflow gpu version is installed correctly,

tf.config.experimental.list_physical_devices('GPU')ortf.test.is_gpu_available() - download tensorflow models from git:

git clone https://github.com/tensorflow/models.git - add tensorflow models to Python path as if the models were installed as packages (export for effect on every process. without export, only for current shell itself.)

export PYTHONPATH=$PYTHONPATH:/path/to/models - intall all dependencies

pip install -r requirements.txt - if using gpu, to solve issue of ptxas

ln -s /usr/local/cuda/bin . # or, ln -s /usr/local/cuda/bin/ptxas /storage/usr/miniconda3/envs/env_name/bin/ptxas # to remove unlink bin - prepare data

- tfds:

python -m tensorflow_datasets.scripts.download_and_prepare --datasets=official datasets - tfrecord:

python imagenet_to_gcs.py --raw_data_dir=$IMAGENET_HOME --local_scratch_dir=$IMAGENET_HOME/tf_records --nogcs_upload

folder structure

Default:

- $IMAGENET_HOME:

train/class1/file1;train/class2/file1;

validation/file1; validation/file1; synset_labels.txt (class1\n class2\n) Modified: - $IMAGENET_HOME:

train/class1/file1;train/class2/file1;

validation/class1/file1; validation/class2/file1;

- tfds:

- run

- models saved in

meta,indexanddatafiles

TIP

meta for graph definition; index for variable table; data for variable values.

- use tensor-board to view training statistics

- models saved in

.pb,variablesandassets

TIP

.pb is similar as meta; variables save the data; assets save other files;

- models saved in

- miscellaneous

- check cuda version:

cat /usr/local/cuda/version.txt

- check cuda version: